Problem

The UK’s nuclear operator, EDF Energy, identified a need to perform a chemical cleaning campaign on critical plant. The objective of this clean was to return the plant to design state while minimising any risks and impacts which may arise from the campaign. As such, detailed simulations were employed by EDF and Ada Mode personnel to define the campaign’s parameters.

In specifying the clean there was a wide range of variables to be considered, many of which could be adjusted between certain limits to optimise the cleaning process. A discrete event simulation method was developed to track how the clean would progress and to help inform decisions on where to fix certain key variables. While this technique was powerful, the effort and time required to run the simulation limited the level of insight it was able to provide.

In ideal circumstances, the simulation approach would allow for a comprehensive and high-resolution exploration of all possible combinations of input variables. In reality, the simulation was run for a small subset of scenarios, informed by supporting technical judgements. Some sensitivity analysis was also completed, informing a view on the extent to which variation in certain parameters influenced the campaign outcome.

Recognising the scope for improvement, work was undertaken to explore a surrogate modelling technique that would enable much more rapid and less constrained exploration of the simulation’s multi-dimensional parameter space.

Approach

The design phase of any project has become increasingly important as technology and fundamental infrastructure become more complex and inter-dependent. The process is often complex, expensive and time consuming, requiring the construction of experiments, prototypes or computational simulations alongside finalisation of the design space and quantification of a design’s function and performance. Computational advancements have enabled an increase in the complexity and depth that is achievable in simulations (discrete event, FEA, physics based, etc.) and system dynamics models.

However, simulations are not constructed as optimisation tools. They allow for a trial of a design but not a robust exploration of a design space, and hence the discovery of optimal and robust design decisions is often a sticking point. Some simulations are very expensive to complete, making a trial-and-improvement approach slow and inefficient. Advances in computational performance are leading to more complex simulations rather than faster iterations, only amplifying the problem. Ada Mode are working to break this cycle through the hybridisation of classical simulation with applied machine learning techniques.

Details of Implementation

In the chemical clean simulation, each campaign design focuses on the selection of a minimum of 3 parameters:

· The concentration of injected cleaning agent

· Temperature profile

· Gas flow rate

KPIs for each simulation output included:

· Campaign duration

· Time required to progress to a critical point in the clean

An optimal campaign should minimise the concentration of cleaning agent while also minimising both metrics of cleaning time.

In this application, a set of 3525 initial design experiments within the feasible region were assessed using the simulation model. These initial designs were constructed on a uniform grid to broadly cover the feasible region of the design space. A set of AI-enabled surrogate models were trained on the experiment design variables and the performance data. Multiple machine learning approaches were tested, employing algorithms of varying complexity including linear regression, decision trees, Gaussian processes and support vector machines. The ML development pipeline incorporated cross-validation of performance, and tuning of feature engineering, hyper-parameter selection and model architecture.
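
As an illustration of the uniform-grid design step, the following is a minimal sketch only. The bounds, grid resolutions, the single number standing in for the temperature profile, and the `is_feasible` constraint are all hypothetical; the source does not give the real limits or how the 3525 designs were laid out.

```python
from itertools import product


def linspace(lo, hi, n):
    """Return n evenly spaced values from lo to hi inclusive."""
    if n == 1:
        return [lo]
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


# Hypothetical bounds and resolutions (not taken from the case study).
concentration = linspace(0.5, 5.0, 10)  # cleaning agent concentration, arbitrary units
temperature = linspace(60.0, 90.0, 7)   # one value standing in for a temperature profile
gas_flow = linspace(1.0, 3.0, 5)        # gas flow rate, arbitrary units


def is_feasible(conc, temp, flow):
    """Hypothetical constraint: rule out high concentration at high temperature."""
    return not (conc > 4.0 and temp > 85.0)


# Full factorial grid, filtered down to the feasible region.
designs = [d for d in product(concentration, temperature, gas_flow) if is_feasible(*d)]
print(len(designs), "feasible designs out of", 10 * 7 * 5)
```

Each tuple in `designs` would then be passed to the simulation, and the resulting inputs and KPIs used as training data for the surrogate models.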

The resultant surrogate models were each able to explain 90% of the variance of the simulation outputs. A key step in the surrogate modelling process is ensuring that the surrogate models reproduce the simulation with suitable accuracy. As the surrogate model is a double abstraction of the real cleaning process (a model of a model), it can compound errors or inaccuracies already present in the simulation.
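
The "variance explained" figure corresponds to the coefficient of determination, R². The sketch below shows one way such a score could be estimated with k-fold cross-validation. It uses a one-variable least-squares line as the surrogate and a made-up function in place of the simulation, purely to keep the example self-contained; the actual models, features and fold scheme used in the project are not described in the source.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def r_squared(y_true, y_pred):
    """Fraction of the variance in y_true explained by y_pred."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def cross_validated_r2(xs, ys, k=5):
    """Pool out-of-fold predictions over k interleaved folds, then score them."""
    preds = [0.0] * len(xs)
    for fold in range(k):
        train = [i for i in range(len(xs)) if i % k != fold]
        held_out = [i for i in range(len(xs)) if i % k == fold]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        for i in held_out:
            preds[i] = a + b * xs[i]
    return r_squared(ys, preds)


# Made-up stand-in for the simulation: duration falls with concentration, plus a small wiggle.
xs = [0.5 + 0.1 * i for i in range(50)]
ys = [40.0 - 5.0 * x + 0.5 * math.sin(7.0 * x) for x in xs]

score = cross_validated_r2(xs, ys)
print(f"cross-validated R^2 = {score:.3f}")
```

Scoring on held-out designs rather than on the training data gives a fairer view of how the surrogate will behave on campaign designs the simulation has not been run for.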

The surrogate models were then used to further explore the design space in place of the simulation. The predictive surrogate model can assess campaign designs many times faster than the simulation. As a benefit of the improved computational speed, optimisation techniques can be applied to the multi-objective design problem to find near-optimal solutions.

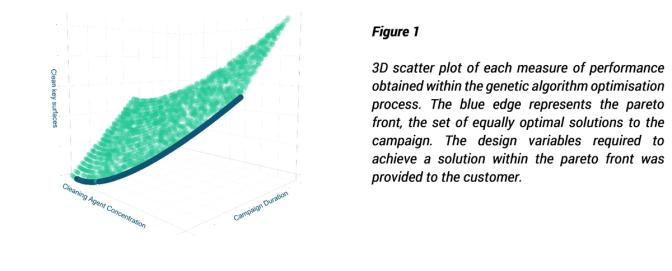

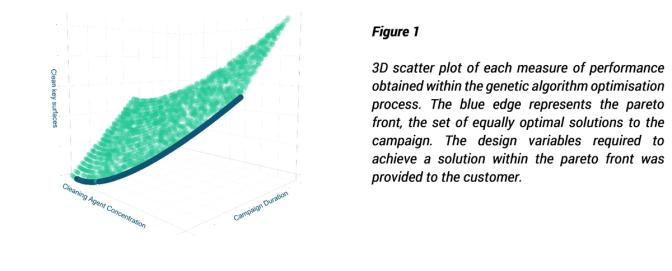

Through use of multi-objective genetic algorithms, we were able to find a set of solutions located on the Pareto front. The Pareto front is the set of solutions that are not dominated by any other: no alternative is at least as good in every dimension of the objective space and strictly better in at least one.
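
To make the definition of dominance concrete, the sketch below filters a small set of candidate solutions down to its Pareto front by direct pairwise comparison. This is not the genetic algorithm used in the project, only an illustration of the non-domination test that such algorithms rely on. All objectives are treated as quantities to minimise, and the candidate values are made up.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points):
    """Keep only the points that no other point dominates (all objectives minimised)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Made-up objective values: (concentration, campaign duration, time to critical point).
candidates = [
    (1.0, 30.0, 12.0),
    (2.0, 20.0, 8.0),
    (3.0, 15.0, 6.0),
    (3.0, 18.0, 7.0),  # dominated by (3.0, 15.0, 6.0)
    (2.5, 25.0, 9.0),  # dominated by (2.0, 20.0, 8.0)
]

front = pareto_front(candidates)
for point in front:
    print(point)
```

The pairwise filter scales quadratically with the number of candidates, which is acceptable when each evaluation comes from a fast surrogate rather than the full simulation.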

Once the Pareto front has been determined, a solution on the front must be chosen that best satisfies a utility function defined by key stakeholders. This utility function would consider other practical implications of each solution, such as safety, cost and risk, and enable selection of the point that best matches the preferences of the decision makers.
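
One simple form such a utility function could take is a weighted sum of normalised objectives, sketched below as a cost to be minimised (equivalently, a negative utility). The weights and the front itself are hypothetical, and a real utility function would also need to account for factors such as safety, cost and risk that are not captured by the three objectives here.

```python
def normalise(values):
    """Rescale values to the range 0..1; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def select_by_utility(front, weights):
    """Return the point on the front with the lowest weighted, normalised cost."""
    columns = [normalise(col) for col in zip(*front)]
    costs = [sum(w * c for w, c in zip(weights, row)) for row in zip(*columns)]
    best = min(range(len(front)), key=costs.__getitem__)
    return front[best]


# Made-up Pareto front: (concentration, campaign duration, time to critical point).
front = [(1.0, 30.0, 12.0), (2.0, 20.0, 8.0), (3.0, 15.0, 6.0)]

# Hypothetical stakeholder weights, one per objective.
weights = (0.5, 0.3, 0.2)

choice = select_by_utility(front, weights)
print("selected design objectives:", choice)
```

Changing the weights moves the selection along the front, which makes the trade-off between cleaning agent use and cleaning time explicit for the decision makers.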

In some applications it may not be feasible to run a suitable number of initial simulations to enable the development of the surrogate model(s). In such cases, it is recommended to use the same principles outlined here but instead apply predictive modelling to define the next set of experiments to carry out. Typically, this is done with Gaussian process models and Bayesian optimisation.
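
As an example of how the next experiment can be chosen, one widely used acquisition function in Bayesian optimisation is expected improvement. The source does not say which acquisition function would be used, so this is an assumption for illustration. For a minimisation problem, with Gaussian process posterior mean $\mu(x)$ and standard deviation $\sigma(x) > 0$, and best objective value observed so far $f_{\min}$:

```latex
\mathrm{EI}(x) = \bigl(f_{\min} - \mu(x)\bigr)\,\Phi(z) + \sigma(x)\,\varphi(z),
\qquad
z = \frac{f_{\min} - \mu(x)}{\sigma(x)}
```

Here $\Phi$ and $\varphi$ are the standard normal cumulative distribution and density functions. The next simulation is run at the design $x$ that maximises $\mathrm{EI}(x)$, which balances designs predicted to perform well against designs where the model is still uncertain.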

Outcome

The surrogate modelling approach applied to the chemical clean discrete event simulation provided a major improvement in computational speed:

· 3525 iterations with surrogate(s) < 1 second

· 3525 iterations with simulation ~ 7.5 hours
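
The size of the improvement can be estimated from the two figures above. The short calculation below treats "~ 7.5 hours" as exactly 7.5 hours and uses 1 second as an upper bound for the surrogate, so the result is a rough lower bound on the speed-up rather than a measured value.

```python
iterations = 3525
simulation_seconds = 7.5 * 3600  # ~7.5 hours, as reported
surrogate_seconds = 1.0          # upper bound: the surrogate(s) took less than 1 second

per_run = simulation_seconds / iterations
min_speedup = simulation_seconds / surrogate_seconds

print(f"simulation: about {per_run:.1f} s per run")
print(f"speed-up: at least {min_speedup:,.0f}x")
```

This works out at roughly 7.7 seconds per simulation run and a speed-up of at least four orders of magnitude.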

Implications

In the traditional approach, expert judgements are used to constrain which parameters to vary, which simulations to run, and which sensitivity analyses to carry out. By enabling major improvements in computational speed, surrogate modelling removes the need for such selection. This permits a more ‘brute force’ approach to parameter space exploration, allowing simulations to challenge expert judgements and helping to improve overall understanding of the problem at hand.

The use of such an approach is especially powerful for complex multidimensional problems where traditional simulations are computationally expensive and/or subject to extensive constraints in order to make implementation feasible.